burekijogurt

Starter

Where are you getting your data? ...

From this thread... was just going off of what Captain Factorial posted. He did 2000-2015...

Okay, well, now you have the complete data set.

That's what you're refusing to understand. People can and do have any conversations they like, provided they fall within the guidelines of our FAQ. Whether you choose to participate in a discussion is your choice, NOT what conversations we should or should not be having.

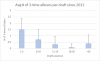

Here are the numbers with 3-time All-Stars by draft year. It starts in 2012 to give players a chance to earn them.

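| 3-time All-Stars | 1-5 | 6-10 | 11-15 | 16-20 | >20 | Total |
|---|---|---|---|---|---|---|
| Average | 1.49 | 0.71 | 0.32 | 0.09 | 0.38 | 3.00 |
| Standard Deviation | 0.8965 | 0.7790 | 0.6588 | 0.2895 | 0.6949 | 1.4884 |
| Mode | 1 | 0 | 0 | 0 | 0 | 3 |
| Max | 4 | 2 | 3 | 1 | 3 | 7 |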

Took the liberty of making a graphic (I like graphs).

Although 6 years is a small sample, it looks like there is no statistically significant difference in getting 3x All-Stars among picks 1-15. Good news for the anti-tank crowd. *The >20 bin does not have the same sample size, so significance can't be used there.

View attachment 7469

Twice as likely isn't statistically significant? By what measure do you infer statistical significance? By the way, the universe of data points is the same for all groupings, so I am not sure what your comment on greater than 20 means.

Considering the 3rd Quartile (assuming a box and whiskers) lands on 1.5, and has a max of 2.4ish I would say that it might be useful to pick a different argument. Out of the 35 players selected in that range, your 3rd quartile ends at 1.5. That's not a big number, statistically speaking.

Not sure what number would make it statistically significant - if 1/5 of the 35 were three time all stars, then that would put the appearance at 5. Given that it's such a low number, and not even one whole value greater than the next stanine (again, not sure how it's being measured, but that's how I'm perceiving it) from 6-10, it really isn't a good argument. I think that's the main point.

Buying 2 lottery tickets doubles your odds of winning, but it does not meaningfully change your likelihood of actually being the winner. Hence why every study must define significance and contextualize it.

Correct, because one lottery ticket is 1 in 300M versus 2 in 300M, which is not a statistically significant difference. 1.5 in 5 versus 0.7 in 5 is hugely different.

It’s just math guys.

Low p-values show significance, not high ones. But maybe you're referring to something else.

Statistical significance is a percentage. Generally something in the 3-5% range is the typical margin for error. In this case it is over a 100% difference. Not only is it statistically significant it is an exponential relationship.

No it is not. Math is the part of statistics that machines do. Interpreting the results is what the human mind and imagination are for.

As several have mentioned, significance isn't exact. A crude rule of thumb is that when standard errors overlap, the difference between the means for the two groups is not significant.

That is indeed a crude rule of thumb. It is important to note that there is a major difference between standard error and standard deviation - and standard deviation is what is depicted above, so the overlap is not terribly meaningful.

Whoa, whoa, whoa, whoa, whoa, guys. We've got all kinds of things going sideways in this discussion and we need a little bit of a reset.

When sactowndog said "starting" in 2012, I'm certain he meant starting in 2012 and going *backwards* in time - players from 2013 and onwards have very little chance of being 3-time All-Stars (and from 2016/2017 have *no* chance of being three-time All-Stars yet) due to their short time in the league. So the sample size here is not 6 years. I can't say how far back sactowndog went, but I'm going to guess the sample size was quite a bit larger than 6 years.

I believe the "not the same sample size" comment is actually talking about the fact that there are 40 picks per year in the >20 bin compared to 5 picks per year in the other bins. While that does mean that the number in that bin does not correspond to the probability of getting a 3x All-Star *per pick*, such that the raw value as listed (somewhere around 0.4 3x All-Stars per draft taken after pick 20) does not compare per pick to the other bins, there's nothing that would prevent comparison of those numbers. The question "Are there more 3x All-Stars drafted 1-5 than drafted 21-60" is a perfectly answerable question, but it's probably not exactly the question most are interested in.
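To put the bins on a per-pick footing, one can divide each per-draft average by the number of picks in the bin. Here is a minimal sketch using the averages from the table earlier in the thread. It assumes 5 picks per bin for the first four bins and 40 picks (21-60) for the >20 bin, as described above; the names `avg_per_draft`, `picks_per_bin` and `per_pick_rates` are purely illustrative.

```python
# Per-draft averages of 3x All-Stars, copied from the table earlier in the thread.
avg_per_draft = {"1-5": 1.49, "6-10": 0.71, "11-15": 0.32, "16-20": 0.09, ">20": 0.38}

# Picks per draft in each bin. ASSUMPTION: 40 for ">20" presumes a 60-pick draft
# (picks 21-60) in every year covered by the data.
picks_per_bin = {"1-5": 5, "6-10": 5, "11-15": 5, "16-20": 5, ">20": 40}


def per_pick_rates(averages, picks):
    """Return the average number of 3x All-Stars per individual pick in each bin."""
    return {name: averages[name] / picks[name] for name in averages}


rates = per_pick_rates(avg_per_draft, picks_per_bin)
for name, rate in rates.items():
    print(f"{name:>5}: {rate:.4f} 3x All-Stars per pick")
```

On a per-pick basis the >20 bin drops to roughly 0.01, below the 16-20 bin at about 0.02, even though its raw per-draft number is higher.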

These aren't box-and-whisker plots, they are a simple bar graph with an error bar indicating the standard deviation. Quartiles are not indicated. Overlap of standard deviation is not a very good way of assessing statistical significance. In fact it's relatively easy to have two distributions that are statistically-significantly different even though they have overlapping standard deviations - it's really a matter of sample size.
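As a rough illustration of why sample size matters here, the sketch below computes a Welch-style t statistic from the table's means and standard deviations for the 1-5 and 6-10 bins. The numbers of draft years (6 and 20) are hypothetical, since the thread does not say how many years the data covers, and the function name `welch_t` is just a label for this example. A |t| above roughly 2 is the usual ballpark for p < 0.05; an exact p-value would need the t distribution, and these are small integer counts rather than normally distributed values, so treat it as a sketch only.

```python
import math


def welch_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Welch's t statistic for two groups, computed from summary statistics only."""
    # Standard error of the difference between the two group means.
    se_diff = math.sqrt(sd_a**2 / n_a + sd_b**2 / n_b)
    return (mean_a - mean_b) / se_diff


# Means and standard deviations from the table (bins 1-5 and 6-10).
mean_1_5, sd_1_5 = 1.49, 0.8965
mean_6_10, sd_6_10 = 0.71, 0.7790

# HYPOTHETICAL numbers of draft years; the real count is not given in the thread.
for n_years in (6, 20):
    t = welch_t(mean_1_5, sd_1_5, n_years, mean_6_10, sd_6_10, n_years)
    print(f"{n_years:>2} draft years: t = {t:.2f}")
```

The means and standard deviations are identical in both runs, and the standard deviations overlap in both; only the number of years changes. With 6 years t comes out around 1.6, with 20 years around 2.9.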

Statistical significance is a concept. The fundamental idea is that if we have observed two (or more) populations, and we find that these populations have different averages, we want to know how confident we are that the two populations are actually different - as opposed to the differences just being accidental. Statistical significance is expressed in terms of a p-value, which is an estimate of the probability that two samples randomly drawn from a single underlying distribution would, by chance, end up at least as different as the ones we observed. P-values are most often calculated by a statistical test such as a Student's t-test or an ANOVA (though there are other methods) - they are not simply reflections of the percentage difference between the averages. For many scientific purposes, a p-value of 0.05 or lower (meaning that, if both observed populations had been drawn from a single population, a difference this large would be expected 5% of the time or less) is used as a threshold for declaring confidence that the result is "real". Results that have p-values of 0.05 or lower are often colloquially referred to as "statistically significant", indicating confidence that the two observations (technically the distributions underlying the two observations) are in fact different.
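To make the p-value idea concrete, here is a minimal sketch of a permutation test, which is one of the "other methods" besides a t-test or ANOVA. The per-year counts in `picks_1_5` and `picks_6_10` are made-up placeholder data (chosen so the group means are 1.5 and 0.7), not the real draft numbers, and the function name is hypothetical. The code pools the two groups, reshuffles the labels many times, and counts how often the shuffled difference in means is at least as large as the observed one.

```python
import random


def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Two-sided permutation p-value for a difference in group means."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    n_a = len(group_a)
    observed = abs(sum(group_a) / n_a - sum(group_b) / len(group_b))
    pooled = list(group_a) + list(group_b)
    hits = 0
    for _ in range(n_shuffles):
        # Pretend the group labels are meaningless: shuffle and re-split.
        rng.shuffle(pooled)
        shuffled_a, shuffled_b = pooled[:n_a], pooled[n_a:]
        diff = abs(sum(shuffled_a) / n_a - sum(shuffled_b) / len(shuffled_b))
        # Small tolerance guards against floating-point ties.
        if diff >= observed - 1e-12:
            hits += 1
    return hits / n_shuffles


# PLACEHOLDER data: 3x All-Stars per draft year for two bins, 20 invented years.
picks_1_5 = [1, 2, 1, 0, 3, 1, 2, 1, 4, 1, 2, 0, 1, 2, 1, 3, 1, 2, 1, 1]
picks_6_10 = [0, 1, 0, 2, 1, 0, 1, 0, 0, 2, 1, 0, 1, 1, 0, 2, 0, 1, 1, 0]

p = permutation_p_value(picks_1_5, picks_6_10)
print(f"permutation p-value: {p:.4f}")
```

With these placeholder numbers the p-value should come out under 0.05, but that says nothing about the real data; it only shows how the calculation works.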

Without having the actual data in hand, it's not possible to perform a proper statistical test. However, given my long experience performing statistical tests on wide varieties of data, and having an offhand look at the data presented, combined with the belief that the data set probably spans 20 years or more, my best guess is that if a proper statistical test were performed that the probability of finding a 3x All-Star with a pick from 1-5 would be "statistically significantly" greater than the probability of finding one with a pick from 6-10.

Hmmmm I have a masters of science and we have always done this during literature analysis. Not a statistician tho, just biology lol XD

also just googled it real quick, but seems to contradict

stand·ard er·ror

noun

STATISTICS

- a measure of the statistical accuracy of an estimate, equal to the standard deviation of the theoretical distribution of a large population of such estimates.

This is a question that I have, how important is the "draft year?"

In McLemore's draft year, a top pick was crucial. It was a weak draft. In this coming one, I've heard it said that among picks 4 to ~8 or 10 there isn't much difference. And most of these players are in positions of need. Other than taking one of the leftovers, is this a year in which it might not make as much of a difference? I don't follow college ball enough to make any educated guess. Context and things like that.

The definition as listed is right, but you're not interpreting it correctly. It doesn't say that the standard error is equal to the standard deviation. Let me make this more concrete:

Let's imagine that we want to know something about the real-world distribution of the height of 18-year-old men living in the United States. There is a real-world distribution of these heights, but it is difficult to get exactly, because there are a lot of members of this population (probably more than 2 million by a simple estimate), and worse, the membership of this population is changing every day (17-year-olds becoming 18-year-olds, 18-year-olds becoming 19-year-olds). Fortunately, we can do a very good job of estimating this distribution by sampling, say, N randomly-selected 18-year-olds. So we have a "population distribution" (with millions of members), and we have N observations drawn from it, which make up our "sample". We do all of our statistics on the sample, but we are really hoping that the statistics that we calculate on the sample also apply to the population distribution, because that's the real-world thing we care about.

The standard deviation is a measure of the amount of spread in the sample. You can use the standard deviation to help estimate how many members of your population are above or below a certain height, for instance. Obviously another measurement we calculate on the sample is the mean - often this is the measurement of most interest. Now one thing we often want to know is how good our estimate of the population mean is, given that we got it from a sample. So what we could imagine doing (as a thought experiment) is to collect N observations from this population a large number of times (say X times), and to calculate the mean of each of these X samples. Now, we have a new distribution, but it is a distribution of MEAN heights rather than a distribution of heights (which is what we have in each individual sample). And the standard deviation of that distribution of MEANS is the standard error. The standard error, in effect, tells you how far the mean of your sample is likely to be from the true population mean.

Fortunately, we don't have to actually go through all that business of the thought experiment to calculate the standard error - it can be estimated simply as the standard deviation divided by the square root of N.

Note that as your sample gets larger (i.e. as N goes up) the standard deviation tends to stabilize - but the standard error continues to drop.
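The thought experiment can actually be run as a small simulation. The sketch below assumes a hypothetical population of heights that is normally distributed with a mean of 70 inches and a standard deviation of 3 inches (both numbers invented for illustration), and the function name `simulate_standard_error` is made up as well. It compares the spread of many sample means against the shortcut of one sample's standard deviation divided by the square root of N.

```python
import random
import statistics


def simulate_standard_error(n, repeats=1000, pop_mean=70.0, pop_sd=3.0, seed=0):
    """Return (sample SD, simulated SE, shortcut SE) for samples of size n."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    # Thought experiment: draw many samples of size n and record each mean.
    sample_means = []
    for _ in range(repeats):
        sample = [rng.gauss(pop_mean, pop_sd) for _ in range(n)]
        sample_means.append(statistics.fmean(sample))
    # The standard deviation of those means is the standard error.
    simulated_se = statistics.stdev(sample_means)
    # Shortcut: take ONE sample and divide its standard deviation by sqrt(n).
    one_sample = [rng.gauss(pop_mean, pop_sd) for _ in range(n)]
    sample_sd = statistics.stdev(one_sample)
    shortcut_se = sample_sd / n ** 0.5
    return sample_sd, simulated_se, shortcut_se


for n in (25, 100, 400):
    sd, sim_se, short_se = simulate_standard_error(n)
    print(f"N={n:>3}: sample SD={sd:.2f}  SE simulated={sim_se:.3f}  SE shortcut={short_se:.3f}")
```

The sample standard deviation should hover around 3 at every N, while both versions of the standard error should shrink by about half each time N is quadrupled.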

OK, thus endeth the statistics lesson.

You are making my head hurt.

Wow you are quite knowledgeable and thorough! Thx
